Radioactivity is a natural phenomenon that has baffled and fascinated scientists and humanity in general for more than a century.

From the discovery of natural radioactivity in the 19th century to the creation of artificial radioactive materials in the 20th century, this phenomenon has revolutionized science, medicine and industry.

Discovery of natural radioactivity

Natural radioactivity is a physical phenomenon in which certain unstable atomic nuclei emit subatomic particles and radiation in an attempt to achieve greater stability.

This process occurs spontaneously in certain chemical elements and isotopes, such as uranium, thorium and potassium-40, which are found in the Earth's crust. These nuclei release alpha particles, beta particles and gamma rays, all of which are forms of ionizing radiation. Natural radioactivity is a constant process and has been present on Earth since its formation.

Becquerel's discovery

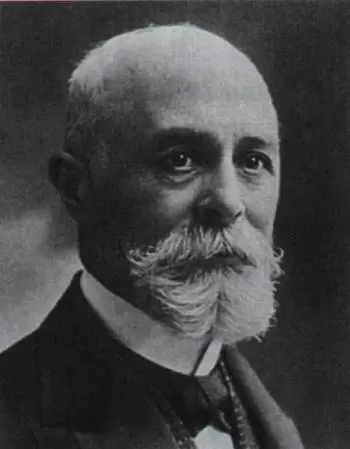

The study of natural radioactivity began with Henri Becquerel's discovery in 1896.

While investigating the properties of uranium salt crystals, Becquerel noticed that they emitted a mysterious radiation that could pass through opaque materials and expose photographic plates.

This observation marked the birth of the study of radioactivity. Becquerel was awarded the Nobel Prize in Physics in 1903, along with Pierre and Marie Curie, who made important contributions to the field.

Marie Curie's experiments

Marie Curie, a pioneering scientist in the field of radioactivity, played a key role in understanding this phenomenon.

In 1898, together with her husband Pierre Curie, Marie discovered two highly radioactive elements: polonium and radium. These discoveries led to the identification of radioactivity as a property of certain chemical elements, which challenged the conventional understanding of matter at the time.

The Curies' research into radioactivity led to the development of more precise measurement techniques, such as the use of the electroscope and dosimetry. They also laid the foundation for future research into the effects of radiation on human health.

Discovery of artificial radioactivity

Artificial radioactivity is the result of the deliberate creation of radioactive elements through the induction of controlled nuclear reactions in laboratories or nuclear reactors.

This is achieved by bombarding atomic nuclei with subatomic particles, such as neutrons, transforming one element into another. An example is the conversion of uranium into plutonium.

These elements do not occur naturally in significant quantities and are used in various applications, such as nuclear power generation, production of isotopes for medicine, and nuclear physics research.
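As an illustration of the uranium-to-plutonium conversion mentioned above, the commonly cited pathway begins when a uranium-238 nucleus captures a neutron. Two successive beta decays then yield plutonium-239. The specific isotopes and the approximate half-lives shown are not stated in the text and are added here only for orientation:

$$
{}^{238}_{92}\mathrm{U} + {}^{1}_{0}\mathrm{n} \;\longrightarrow\; {}^{239}_{92}\mathrm{U} \;\xrightarrow{\beta^-,\ \approx 23.5\ \text{min}}\; {}^{239}_{93}\mathrm{Np} \;\xrightarrow{\beta^-,\ \approx 2.4\ \text{days}}\; {}^{239}_{94}\mathrm{Pu}
$$

Each beta decay raises the atomic number by one while leaving the mass number unchanged, which is how element 92 (uranium) becomes element 94 (plutonium).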

The discovery of artificial radioactive isotopes

While researching natural radioactivity, scientists also explored the possibility of creating radioactive elements artificially.

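In 1934, Irène Joliot-Curie and Frédéric Joliot produced the first artificial radioactive isotope by bombarding aluminium with alpha particles. That same year, Italian physicist Enrico Fermi began bombarding uranium atoms with neutrons. This line of work eventually led to the creation of transuranic elements, such as neptunium and plutonium, which were first identified in 1940 and 1941. These achievements marked the beginning of artificial radioactivity.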

The discovery of artificial radioactive isotopes had a significant impact on nuclear research and the creation of new technologies, including the atomic bomb and nuclear power generation. However, it also raised concerns about nuclear proliferation and the dangers associated with handling radioactive materials.

Applications of artificial radioactivity

Artificial radioactivity has had a wide range of applications in science and technology. One of the most notable uses is nuclear power generation. Nuclear power plants use nuclear fission to produce electricity efficiently and with low carbon emissions.

Another important use of artificial radioactivity is radiotherapy in the treatment of cancer. Radiation is used to destroy cancer cells and shrink tumors, which has saved countless lives over the years.